Dev C++ Debug Watch Array

Why are my files corrupted on format?

Files can be corrupted if you either have a multi-root workspace in which one folder is a child of another, or you open your file through a symlink. Reduce the workspace to a single folder and remove the symlink; this should fix the problem.

How do I view the contents of an array in the watch window?

In the debugger, you can view an array in C or C++ by adding it to the watch window; left-click the little (+) next to the array to expand it and see its contents. If the variable is a pointer to a dynamically allocated array, type 'pointer, N' in the watch window to view its first N elements. This is Visual Studio syntax; in GDB-based debuggers, such as the one Dev-C++ uses, the equivalent expression is *pointer@N.

- Trick: say you have a std::vector<int> v; and you want to watch v[23], or perhaps the range v[23] to v[23+n]. Add the variable to the watch window, then append ,! to its name (for example, v,!). This tells Visual Studio that you want to turn off debugger visualization for that variable.

- Sep 18, 2009: Debugging with Dev-C++.
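The watch expressions described above can be tried on a small program. The following sketch is hypothetical (squares_sum is a made-up function); it keeps a raw heap array p and a std::vector v alive at the same breakpoint so you can compare how the debugger shows each. The 'p, 5' and 'v,!' forms are the Visual Studio expressions from the text; '*p@5' is the GDB form, which applies to GDB-based front ends such as Dev-C++.

```cpp
#include <cstddef>
#include <numeric>
#include <vector>

// Hypothetical demo function: pause inside it and try the watch
// expressions on `p` (a raw heap array) and `v` (a std::vector).
long long squares_sum(std::size_t n) {
    int* p = new int[n];            // raw pointer: the watch window shows only the first element
    std::vector<int> v(n);          // vector: expanded through the debugger's visualizer
    for (std::size_t i = 0; i < n; ++i) {
        p[i] = static_cast<int>(i * i);
        v[i] = p[i];
    }
    // Set a breakpoint on the next line. Suggested watch expressions (n >= 5):
    //   Visual Studio:          p, 5    (first five elements of p)
    //   Visual Studio, raw:     v,!     (vector visualizer turned off)
    //   GDB-based (Dev-C++):    *p@5    (first five elements of p)
    long long total = std::accumulate(v.begin(), v.end(), 0LL);
    delete[] p;
    return total;
}
```

For example, calling squares_sum(5) from main and stopping at the marked line lets you compare p, 5 with the expanded v; the function itself returns 0 + 1 + 4 + 9 + 16 = 30.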

How do I get IntelliSense to work correctly?

Without any configuration, the extension will attempt to locate headers by searching your workspace folder and by emulating a compiler it finds on your computer (for example, cl.exe, WSL, or MinGW on Windows; gcc or clang on macOS and Linux). If this automatic configuration is insufficient, you can modify the defaults by running the C/C++: Edit Configurations (UI) command. In that view, you can change the compiler you wish to emulate, the paths to include files you wish to use, preprocessor definitions, and more.

Or, if you install a build system extension that interfaces with our extension, you can allow that extension to provide the configurations for you. For example, the CMake Tools extension can configure projects that use the CMake build system. Use the C/C++: Change Configuration Provider... command to enable any such extension to provide the configurations for IntelliSense.

A third option for projects without build system extension support is to use a compile_commands.json file if your build system supports generating this file. In the 'Advanced' section of the Configuration UI, you can supply the path to your compile_commands.json and the extension will use the compilation information listed in that file to configure IntelliSense.

Note: If the extension is unable to resolve any of the #include directives in your source code, it will not show linting information for the body of the source file. If you check the Problems window in VS Code, the extension will provide more information about which files it was unable to locate. If you want to show the linting information anyway, you can change the value of the C_Cpp.errorSquiggles setting.

Why do I see red squiggles under Standard Library types?

The most common reason for this is missing include paths and defines. The easiest way to fix this on each platform is as follows:

Linux/Mac: Set "intelliSenseMode": "clang-x64" or "intelliSenseMode": "gcc-x64", and set "compilerPath" in c_cpp_properties.json to the path to your compiler.

Windows: If you are using the Microsoft C++ compiler, set "intelliSenseMode": "msvc-x64", but don't add the "compilerPath" property to c_cpp_properties.json. If you are using Clang for Windows, set "intelliSenseMode": "msvc-x64", and set "compilerPath" in c_cpp_properties.json to the path to your compiler.

How do I get the new IntelliSense to work with MinGW on Windows?

See Get Started with C++ and Mingw-w64 in Visual Studio Code.

How do I get the new IntelliSense to work with the Windows Subsystem for Linux?

See Get Started with C++ and Windows Subsystem for Linux in Visual Studio Code.

What is the difference between includePath and browse.path?

These two settings are available in c_cpp_properties.json and can be confusing.

includePath

This array of path strings is used by the 'Default' IntelliSense engine. This new engine provides semantic-aware IntelliSense features and will be the eventual replacement for the Tag Parser that has been powering the extension since it was first released. It currently provides tooltips and error squiggles in the editor. The remaining features (for example, code completion, signature help, Go to Definition, ...) are implemented using the Tag Parser's database, so it is still important to ensure that the browse.path setting is properly set.

The paths that you specify for this setting are the same paths that you would send to your compiler via the -I switch. When your source files are parsed, the IntelliSense engine will prepend these paths to the files specified by your #include directives while attempting to resolve them. These paths are not searched recursively.
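As an illustration of the lookup rule above, here is a minimal model of non-recursive include-path resolution, assuming a simple in-order search over the listed directories. It is not the extension's implementation; the function name resolve_include and the injected file_exists predicate are hypothetical, and the predicate is passed in only so the rule can be checked without touching the disk.

```cpp
#include <functional>
#include <optional>
#include <string>
#include <vector>

// Hypothetical model of -I style lookup: each include path is tried in
// order by prepending it to the #include name. Subdirectories of an
// include path are never searched (the lookup is not recursive).
std::optional<std::string> resolve_include(
    const std::string& header,
    const std::vector<std::string>& include_paths,
    const std::function<bool(const std::string&)>& file_exists) {
    for (const auto& dir : include_paths) {
        std::string candidate = dir;
        if (!candidate.empty() && candidate.back() != '/') candidate += '/';
        candidate += header;                  // prepend the path to the #include name
        if (file_exists(candidate)) return candidate;
    }
    return std::nullopt;                      // not found in any listed directory
}
```

With the include path /proj/include, #include "util.h" resolves to /proj/include/util.h, while a header stored at /proj/include/detail/impl.h is found only when written as #include "detail/impl.h".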

browse.path

This array of path strings is used by the 'Tag Parser' ('browse engine'). This engine will recursively enumerate all files under the paths specified and track them as potential includes while tag parsing your project folder. To disable recursive enumeration of a path, you can append a /* to the path string.
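A minimal sketch of how a browse.path entry could be interpreted, assuming only the trailing /* convention described above. BrowseEntry, parse_browse_entry, and enumerate_files are hypothetical names, and the Tag Parser's real enumeration logic may differ; the sketch needs C++17 for std::filesystem.

```cpp
#include <filesystem>
#include <string>
#include <system_error>
#include <vector>

namespace fs = std::filesystem;

// Hypothetical model of one browse.path entry: a trailing "/*" means
// "this directory only"; otherwise the directory is walked recursively.
struct BrowseEntry {
    std::string root;
    bool recursive;
};

BrowseEntry parse_browse_entry(const std::string& entry) {
    const std::string suffix = "/*";
    if (entry.size() >= suffix.size() &&
        entry.compare(entry.size() - suffix.size(), suffix.size(), suffix) == 0) {
        return {entry.substr(0, entry.size() - suffix.size()), false};
    }
    return {entry, true};
}

// Collects regular files under the entry, descending into subdirectories
// only when the entry is recursive. Missing directories yield no files.
std::vector<fs::path> enumerate_files(const BrowseEntry& e) {
    std::vector<fs::path> files;
    std::error_code ec;
    if (!fs::is_directory(e.root, ec)) return files;
    if (e.recursive) {
        for (const auto& f : fs::recursive_directory_iterator(e.root, ec))
            if (f.is_regular_file()) files.push_back(f.path());
    } else {
        for (const auto& f : fs::directory_iterator(e.root, ec))
            if (f.is_regular_file()) files.push_back(f.path());
    }
    return files;
}
```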

When you open a workspace for the first time, the extension adds ${workspaceRoot} to both arrays. If this is undesirable, you can open your c_cpp_properties.json file and remove it.

How do I recreate the IntelliSense database?


Starting in version 0.12.3 of the extension, there is a command to reset your IntelliSense database. Open the Command Palette (⇧⌘P (Windows, Linux Ctrl+Shift+P)) and choose the C_Cpp: Reset IntelliSense Database command.

What is the ipch folder?

The language server caches information about included header files to improve the performance of IntelliSense. When you edit C/C++ files in your workspace folder, the language server will store cache files in the ipch folder. By default, the ipch folder is stored under the user directory. Specifically, it is stored under %LocalAppData%/Microsoft/vscode-cpptools on Windows, and for Linux and macOS it is under ~/.vscode-cpptools. By using the user directory as the default path, it will create one cache location per user for the extension. As the cache size limit is applied to a cache location, having one cache location per user will limit the disk space usage of the cache to that one folder for everyone using the default setting value.
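The default location rule can be written down as a small function. This sketch assumes that %LocalAppData% corresponds to the LOCALAPPDATA environment variable and that ~ corresponds to HOME; default_cache_root and process_env are hypothetical names, and the environment lookup is passed in so the rule can be checked without changing the real environment.

```cpp
#include <cstdlib>
#include <functional>
#include <optional>
#include <string>

// Hypothetical environment lookup signature: returns nullopt if unset.
using EnvLookup = std::function<std::optional<std::string>(const std::string&)>;

// Real lookup through the process environment.
std::optional<std::string> process_env(const std::string& name) {
    const char* value = std::getenv(name.c_str());
    if (value == nullptr) return std::nullopt;
    return std::string(value);
}

// Default cache root (where the ipch folder lives) as described in the text:
// %LocalAppData%/Microsoft/vscode-cpptools on Windows, ~/.vscode-cpptools elsewhere.
std::optional<std::string> default_cache_root(bool is_windows, const EnvLookup& env) {
    if (is_windows) {
        auto base = env("LOCALAPPDATA");
        if (!base) return std::nullopt;
        return *base + "/Microsoft/vscode-cpptools";
    }
    auto home = env("HOME");
    if (!home) return std::nullopt;
    return *home + "/.vscode-cpptools";
}
```

On Linux, default_cache_root(false, process_env) yields something like /home/<user>/.vscode-cpptools. Because every workspace resolves to the same per-user root, a single size limit can cover all of them.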

VS Code per-workspace storage folders were not used because the location provided by VS Code is not well known, and we didn't want to write gigabytes of files where users may not see them or know where to find them.

With this in mind, we knew that we would not be able to meet the needs of every development environment, so we provided settings that let you customize the cache in the way that works best for your situation.

"C_Cpp.intelliSenseCachePath": <string>

This setting allows you to set workspace or global overrides for the cache path. For example, if you want to share a single cache location for all workspace folders, open the VS Code settings, and add a User setting for IntelliSense Cache Path.

"C_Cpp.intelliSenseCacheSize": <number>

This setting allows you to set a limit on the amount of caching the extension does. This is an approximation, but the extension will make a best effort to keep the cache size as close to the limit you set as possible. If you are sharing the cache location across workspaces as explained above, you can still increase/decrease the limit, but you should make sure that you add a User setting for IntelliSense Cache Size.

How do I disable the IntelliSense cache (ipch)?

If you do not want to use the IntelliSense caching feature that improves the performance of IntelliSense, you can disable the feature by setting the IntelliSense Cache Size setting to 0 (or "C_Cpp.intelliSenseCacheSize": 0 in the JSON settings editor).
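The following toy model illustrates what a best-effort size limit can mean. It is a hypothetical oldest-first eviction policy chosen for illustration, not the extension's documented algorithm; CacheEntry and entries_to_evict are made-up names, and sizes are assumed to be in megabytes. A limit of 0 is treated as "cache disabled", matching the setting described above.

```cpp
#include <algorithm>
#include <cstdint>
#include <string>
#include <vector>

// Hypothetical cache entry: a name, its size, and a last-use timestamp.
struct CacheEntry {
    std::string name;
    std::uint64_t size_mb;
    std::uint64_t last_used;   // larger value = used more recently
};

// Returns the names to delete so the cache fits within limit_mb.
std::vector<std::string> entries_to_evict(std::vector<CacheEntry> entries, std::uint64_t limit_mb) {
    std::vector<std::string> evicted;
    if (limit_mb == 0) {                      // size 0: caching disabled, drop everything
        for (const auto& e : entries) evicted.push_back(e.name);
        return evicted;
    }
    // Least recently used entries come first.
    std::sort(entries.begin(), entries.end(),
              [](const CacheEntry& a, const CacheEntry& b) { return a.last_used < b.last_used; });
    std::uint64_t total = 0;
    for (const auto& e : entries) total += e.size_mb;
    for (const auto& e : entries) {
        if (total <= limit_mb) break;         // best effort: stop as soon as we fit
        evicted.push_back(e.name);
        total -= e.size_mb;
    }
    return evicted;
}
```

With entries a (100 MB, oldest), c (300 MB), and b (200 MB, newest) and a 350 MB limit, the sketch evicts a and then c, leaving 200 MB.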

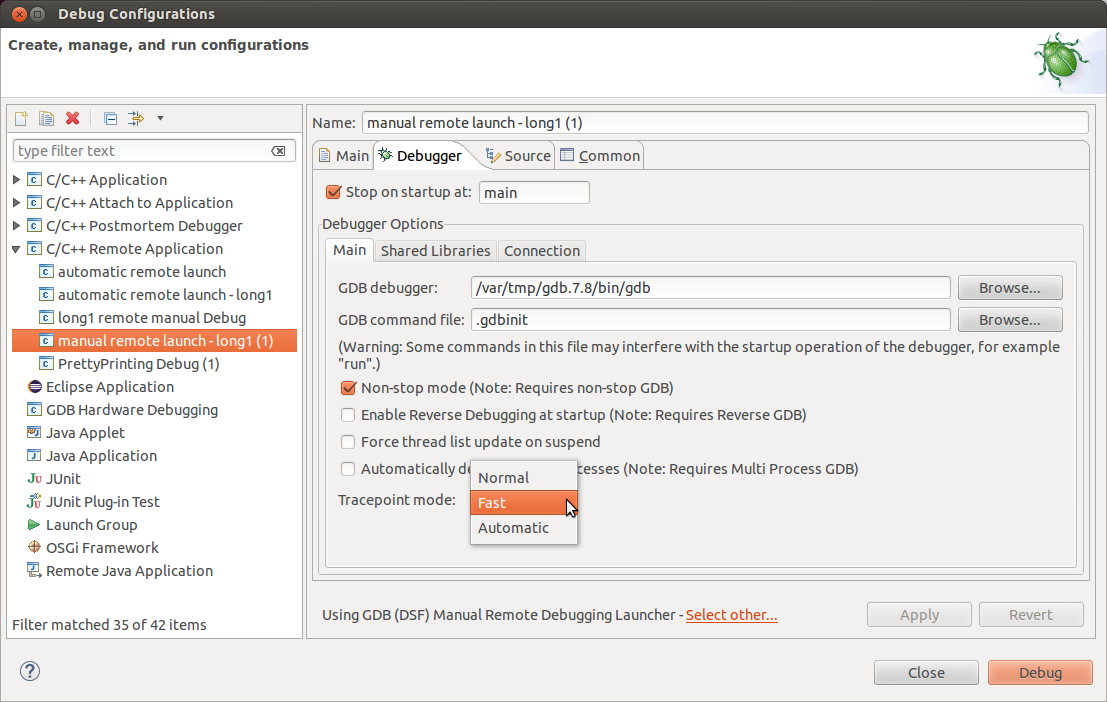

How do I set up debugging?

The debugger needs to be configured to know which executable and debugger to use:

From the main menu, select Run > Add Configuration...

The file launch.json will now be open for editing with a new configuration. The default settings will probably work except that you need to specify the program setting.

See Debug configuration for more in-depth documentation on how to configure the debugger.

How do I enable debug symbols?

Enabling debug symbols is dependent on the type of compiler you are using. Below are some of the compilers and the compiler options necessary to enable debug symbols.

When in doubt, please check your compiler's documentation for the options necessary to include debug symbols in the output. This may be some variant of -g or --debug.

Clang (C++)

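- If you invoke the compiler manually, add the --debug option.

- If you're using a script, make sure the CXXFLAGS environment variable is set. For example, export CXXFLAGS="${CXXFLAGS} --debug" (use double quotes so that ${CXXFLAGS} is expanded).

- If you're using CMake, make sure CMAKE_CXX_FLAGS is set. For example, cmake -DCMAKE_CXX_FLAGS="${CXXFLAGS}" .. (CMake reads the CXXFLAGS environment variable only when it first configures a build directory, and it does not read a CMAKE_CXX_FLAGS environment variable).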

Clang (C)

See Clang C++ but use CFLAGS instead of CXXFLAGS.

gcc or g++

If you invoke the compiler manually, add the -g option.

cl.exe

If you invoke the compiler manually, add the /Zi option. Symbols are located in the *.pdb file.
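A tiny program is enough to confirm that symbols are being generated. The build commands in the comments are typical invocations for the compilers listed above (the -O0 choice is an assumption that simply keeps the code easy to step through); check your compiler's documentation for your setup. factorial is a hypothetical function to break in.

```cpp
// Hypothetical sample for checking that debug symbols are present.
// Typical builds with symbols:
//   g++     -g -O0 main.cpp -o main
//   clang++ -g -O0 main.cpp -o main
//   cl      /Zi /EHsc main.cpp          (symbols go to *.pdb files)
// Set a breakpoint on the marked line. With symbols, the breakpoint binds
// and the call stack shows function names and line numbers.
int factorial(int n) {
    int result = 1;
    for (int i = 2; i <= n; ++i) {
        result *= i;              // <- breakpoint here
    }
    return result;
}
```

If the breakpoint stays unbound or the call stack is grey with a build like this, the executable being launched is probably not the one that was built with symbols.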

Why is debugging not working?

My breakpoints aren't being hit

When you start debugging, if your breakpoints aren't bound (solid red circle) or they are not being hit, you may need to enable debug symbols during compilation.

Debugging starts but all the lines in my stack trace are grey

If your debugger is showing a grey stacktrace, won't stop at a breakpoint, or the symbols in the call stack are grey, then your executable was compiled without debug symbols.

This topic demonstrates how to debug an application that uses C++ Accelerated Massive Parallelism (C++ AMP) to take advantage of the graphics processing unit (GPU). It uses a parallel-reduction program that sums up a large array of integers. This walkthrough illustrates the following tasks:

Launching the GPU debugger.

Inspecting GPU threads in the GPU Threads window.

Using the Parallel Stacks window to simultaneously observe the call stacks of multiple GPU threads.

Using the Parallel Watch window to inspect values of a single expression across multiple threads at the same time.

Flagging, freezing, thawing, and grouping GPU threads.

Executing all the threads of a tile to a specific location in code.

Prerequisites

Before you start this walkthrough:

Read C++ AMP Overview.

Make sure that line numbers are displayed in the text editor. For more information, see How to: Display Line Numbers in the Editor.

Make sure you are running at least Windows 8 or Windows Server 2012 to support debugging on the software emulator.

Note

Your computer might show different names or locations for some of the Visual Studio user interface elements in the following instructions. The Visual Studio edition that you have and the settings that you use determine these elements. For more information, see Personalizing the IDE.

To create the sample project

The instructions for creating a project vary depending on which version of Visual Studio you are using.

To create the sample project in Visual Studio 2019

On the menu bar, choose File > New > Project to open the Create a New Project dialog box.

At the top of the dialog, set Language to C++, set Platform to Windows, and set Project type to Console.

From the filtered list of project types, choose Console App, then choose Next. In the next page, enter AMPMapReduce in the Name box to specify a name for the project, and specify the project location if desired.

Choose the Create button to create the client project.

To create the sample project in Visual Studio 2017 or Visual Studio 2015

Start Visual Studio.

On the menu bar, choose File > New > Project.

Under Installed in the templates pane, choose Visual C++.

Choose Win32 Console Application, type AMPMapReduce in the Name box, and then choose the OK button.

Choose the Next button.

Clear the Precompiled header check box, and then choose the Finish button.

In Solution Explorer, delete stdafx.h, targetver.h, and stdafx.cpp from the project.

Next:

- Open AMPMapReduce.cpp and replace its content with the following code.

On the menu bar, choose File > Save All.

In Solution Explorer, open the shortcut menu for AMPMapReduce, and then choose Properties.

In the Property Pages dialog box, under Configuration Properties, choose C/C++ > Precompiled Headers.

For the Precompiled Header property, select Not Using Precompiled Headers, and then choose the OK button.

On the menu bar, choose Build > Build Solution.

Debugging the CPU Code

In this procedure, you will use the Local Windows Debugger to make sure that the CPU code in this application is correct. The segment of the CPU code in this application that is especially interesting is the for loop in the reduction_sum_gpu_kernel function. It controls the tree-based parallel reduction that is run on the GPU.
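To make the structure of such a reduction easier to follow while stepping through it, here is a sequential CPU emulation of a tiled, tree-based sum reduction. It is a sketch of the general technique, not the walkthrough's AMPMapReduce.cpp: sum_tiles_emulated, reduction_sum_emulated, and TILE are hypothetical, and localA, localIdx, globalIdx, and stride_size only mirror the names you inspect in the debugger windows later. The loop in reduction_sum_emulated plays the role of the CPU-side for loop; each call to sum_tiles_emulated stands in for one GPU kernel launch.

```cpp
#include <cstddef>
#include <vector>

constexpr std::size_t TILE = 32;   // threads per tile (assumed)

// One emulated kernel launch: each tile sums up to TILE values that sit
// stride_size apart and writes the tile's sum back to its first slot.
void sum_tiles_emulated(std::vector<int>& A, std::size_t stride_size) {
    const std::size_t span = TILE * stride_size;        // elements covered by one tile
    for (std::size_t tile_start = 0; tile_start < A.size(); tile_start += span) {
        int localA[TILE] = {};                           // stand-in for tile_static memory
        for (std::size_t localIdx = 0; localIdx < TILE; ++localIdx) {
            std::size_t globalIdx = tile_start + localIdx * stride_size;
            localA[localIdx] = globalIdx < A.size() ? A[globalIdx] : 0;  // pad with 0
        }
        // Halve the number of active lanes each step; on a GPU a barrier
        // separates the steps. Lane i only reads lanes >= step, which no lane
        // writes during that step, so sequential in-place updates are safe.
        for (std::size_t step = TILE / 2; step > 0; step /= 2)
            for (std::size_t localIdx = 0; localIdx < step; ++localIdx)
                localA[localIdx] += localA[localIdx + step];
        A[tile_start] = localA[0];
    }
}

// CPU-side control loop: after each launch the partial sums are TILE
// times farther apart, so the stride grows by TILE until one value remains.
int reduction_sum_emulated(std::vector<int> A) {
    if (A.empty()) return 0;
    for (std::size_t stride_size = 1; stride_size < A.size(); stride_size *= TILE)
        sum_tiles_emulated(A, stride_size);
    return A[0];
}
```

For example, reduction_sum_emulated(std::vector<int>(10000, 1)) makes three passes (stride sizes 1, 32, and 1024) and returns 10000.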

To debug the CPU code

In Solution Explorer, open the shortcut menu for AMPMapReduce, and then choose Properties.

In the Property Pages dialog box, under Configuration Properties, choose Debugging. Verify that Local Windows Debugger is selected in the Debugger to launch list.

Return to the Code Editor.

Set breakpoints on the lines of code shown in the following illustration (approximately lines 67 and 70).

[Illustration: CPU breakpoints]

On the menu bar, choose Debug > Start Debugging.

In the Locals window, observe the value for stride_size until the breakpoint at line 70 is reached.

On the menu bar, choose Debug > Stop Debugging.

Debugging the GPU Code

This section shows how to debug the GPU code, which is the code contained in the sum_kernel_tiled function. The GPU code computes the sum of integers for each 'block' in parallel.

To debug the GPU code

In Solution Explorer, open the shortcut menu for AMPMapReduce, and then choose Properties.

In the Property Pages dialog box, under Configuration Properties, choose Debugging.

In the Debugger to launch list, select Local Windows Debugger.

In the Debugger Type list, verify that Auto is selected.

Auto is the default value. Prior to Windows 10, GPU Only is the required value instead of Auto.

Choose the OK button.

Set a breakpoint at line 30, as shown in the following illustration.

[Illustration: GPU breakpoint]

On the menu bar, choose Debug > Start Debugging. The breakpoints in the CPU code at lines 67 and 70 are not executed during GPU debugging because those lines of code are executed on the CPU.

To use the GPU Threads window

To open the GPU Threads window, on the menu bar, choose Debug > Windows > GPU Threads.

You can inspect the state of the GPU threads in the GPU Threads window that appears.

Dock the GPU Threads window at the bottom of Visual Studio. Choose the Expand Thread Switch button to display the tile and thread text boxes. The GPU Threads window shows the total number of active and blocked GPU threads, as shown in the following illustration.

[Illustration: GPU Threads window]

There are 313 tiles allocated for this computation. Each tile contains 32 threads. Because local GPU debugging occurs on a software emulator, there are four active GPU threads. The four threads execute the instructions simultaneously and then move on together to the next instruction.

In the GPU Threads window, there are four GPU threads active and 28 GPU threads blocked at the tile_barrier::wait statement defined at about line 21 (t_idx.barrier.wait();). All 32 GPU threads belong to the first tile, tile[0]. An arrow points to the row that includes the current thread. To switch to a different thread, use one of the following methods:

- In the row for the thread to switch to in the GPU Threads window, open the shortcut menu and choose Switch To Thread. If the row represents more than one thread, you will switch to the first thread according to the thread coordinates.

- Enter the tile and thread values of the thread in the corresponding text boxes and then choose the Switch Thread button.

The Call Stack window displays the call stack of the current GPU thread.
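The tile count shown in the GPU Threads window can be cross-checked with a little arithmetic. This sketch assumes the computation's extent is padded up to a whole number of 32-thread tiles; tiles_for and padded_extent are hypothetical helpers, not functions from the walkthrough's code.

```cpp
#include <cstddef>

// Number of tiles needed to cover n elements (ceiling division).
constexpr std::size_t tiles_for(std::size_t n, std::size_t tile_size) {
    return (n + tile_size - 1) / tile_size;
}

// Extent after padding n up to a whole number of tiles.
constexpr std::size_t padded_extent(std::size_t n, std::size_t tile_size) {
    return tiles_for(n, tile_size) * tile_size;
}

// 313 tiles of 32 threads give 313 * 32 = 10,016 thread slots, so under
// the padding assumption the first launch covers 9,985 to 10,016 elements.
static_assert(padded_extent(10016, 32) == 313 * 32, "313 full tiles");
static_assert(tiles_for(9985, 32) == 313 && tiles_for(9984, 32) == 312, "range boundaries");
```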

To use the Parallel Stacks window

To open the Parallel Stacks window, on the menu bar, choose Debug > Windows > Parallel Stacks.

You can use the Parallel Stacks window to simultaneously inspect the stack frames of multiple GPU threads.

Dock the Parallel Stacks window at the bottom of Visual Studio.

Make sure that Threads is selected in the list in the upper-left corner. In the following illustration, the Parallel Stacks window shows a call-stack focused view of the GPU threads that you saw in the GPU Threads window.

[Illustration: Parallel Stacks window]

32 threads went from _kernel_stub to the lambda statement in the parallel_for_each function call and then to the sum_kernel_tiled function, where the parallel reduction occurs. 28 out of the 32 threads have progressed to the tile_barrier::wait statement and remain blocked at line 22, whereas the other 4 threads remain active in the sum_kernel_tiled function at line 30.

You can inspect the properties of a GPU thread that are available in the GPU Threads window in the rich DataTip of the Parallel Stacks window. To do this, rest the mouse pointer on the stack frame of sum_kernel_tiled. The following illustration shows the DataTip.

[Illustration: GPU thread DataTip]

For more information about the Parallel Stacks window, see Using the Parallel Stacks Window.

To use the Parallel Watch window

To open the Parallel Watch window, on the menu bar, choose Debug > Windows > Parallel Watch > Parallel Watch 1.

You can use the Parallel Watch window to inspect the values of an expression across multiple threads.

Dock the Parallel Watch 1 window to the bottom of Visual Studio. There are 32 rows in the table of the Parallel Watch window. Each corresponds to a GPU thread that appeared in both the GPU Threads window and the Parallel Stacks window. Now, you can enter expressions whose values you want to inspect across all 32 GPU threads.

Select the Add Watch column header, enter localIdx, and then choose the Enter key.

Select the Add Watch column header again, type globalIdx, and then choose the Enter key.

Select the Add Watch column header again, type localA[localIdx[0]], and then choose the Enter key.

You can sort by a specified expression by selecting its corresponding column header.

Select the localA[localIdx[0]] column header to sort the column. The following illustration shows the results of sorting by localA[localIdx[0]].

[Illustration: Results of sort]

You can export the content in the Parallel Watch window to Excel by choosing the Excel button and then choosing Open in Excel. If you have Excel installed on your development computer, this opens an Excel worksheet that contains the content.

In the upper-right corner of the Parallel Watch window, there's a filter control that you can use to filter the content by using Boolean expressions. Enter localA[localIdx[0]] > 20000 in the filter control text box and then choose the Enter key.

The window now contains only threads on which the localA[localIdx[0]] value is greater than 20000. The content is still sorted by the localA[localIdx[0]] column, which is the sorting action you performed earlier.

Flagging GPU Threads

You can mark specific GPU threads by flagging them in the GPU Threads window, the Parallel Watch window, or the DataTip in the Parallel Stacks window. If a row in the GPU Threads window contains more than one thread, flagging that row flags all threads that are contained in the row.

To flag GPU threads

Select the [Thread] column header in the Parallel Watch 1 window to sort by tile index and thread index.

On the menu bar, choose Debug > Continue, which causes the four threads that were active to progress to the next barrier (defined at line 32 of AMPMapReduce.cpp).

Choose the flag symbol on the left side of the row that contains the four threads that are now active.

The following illustration shows the four active flagged threads in the GPU Threads window.

[Illustration: Active threads in the GPU Threads window]

The Parallel Watch window and the DataTip of the Parallel Stacks window both indicate the flagged threads.

If you want to focus on the four threads that you flagged, you can choose to show only the flagged threads in the GPU Threads, Parallel Watch, and Parallel Stacks windows.

Choose the Show Flagged Only button on any of the windows or on the Debug Location toolbar. The following illustration shows the Show Flagged Only button on the Debug Location toolbar.

[Illustration: Show Flagged Only button]

Now the GPU Threads, Parallel Watch, and Parallel Stacks windows display only the flagged threads.

Freezing and Thawing GPU Threads

You can freeze (suspend) and thaw (resume) GPU threads from either the GPU Threads window or the Parallel Watch window. You can freeze and thaw CPU threads the same way; for information, see How to: Use the Threads Window.

To freeze and thaw GPU threads

Choose the Show Flagged Only button again to display all the threads.

On the menu bar, choose Debug > Continue.

Open the shortcut menu for the active row and then choose Freeze.

The following illustration of the GPU Threads window shows that all four threads are frozen.

[Illustration: Frozen threads in the GPU Threads window]

Similarly, the Parallel Watch window shows that all four threads are frozen.

On the menu bar, choose Debug > Continue to allow the next four GPU threads to progress past the barrier at line 22 and to reach the breakpoint at line 30. The GPU Threads window shows that the four previously frozen threads remain frozen and in the active state.

On the menu bar, choose Debug > Continue.

From the Parallel Watch window, you can also thaw individual or multiple GPU threads.

To group GPU threads

On the shortcut menu for one of the threads in the GPU Threads window, choose Group By > Address.

The threads in the GPU Threads window are grouped by address. The address corresponds to the instruction in disassembly where each group of threads is located. 24 threads are at line 22, where the tile_barrier::wait method is executed. 12 threads are at the instruction for the barrier at line 32. Four of these threads are flagged. Eight threads are at the breakpoint at line 30. Four of these threads are frozen. The following illustration shows the grouped threads in the GPU Threads window.

[Illustration: Grouped threads in the GPU Threads window]

You can also perform the Group By operation by opening the shortcut menu for the data grid of the Parallel Watch window, choosing Group By, and then choosing the menu item that corresponds to how you want to group the threads.

Running All Threads to a Specific Location in Code

You can run all the threads in a given tile to the line that contains the cursor by using Run Current Tile To Cursor.

To run all threads to the location marked by the cursor

On the shortcut menu for the frozen threads, choose Thaw.

In the Code Editor, put the cursor on line 30.

On the shortcut menu for the Code Editor, choose Run Current Tile To Cursor.

The 24 threads that were previously blocked at the barrier at line 21 have progressed to line 32. This is shown in the GPU Threads window.

See also


C++ AMP Overview

Debugging GPU Code

How to: Use the GPU Threads Window

How to: Use the Parallel Watch Window

Analyzing C++ AMP Code with the Concurrency Visualizer